Depot container builds

Building a Docker image using Depot is up to 40x faster than on your local machine or CI provider. See a live benchmark.

At a high level, here's what happens when you run depot build:

- We send your build context to a remote builder instance running an optimized BuildKit implementation.

- BuildKit performs the build and sends the resulting image back to your machine or a remote registry (according to the options you passed in the build command).

- Your Depot project's persistent cache stores the resulting layer cache from the build automatically.

- Your team or CI provider accesses the persistent cache for subsequent builds.

Switching to Depot

Switching to Depot for your container builds is usually a one-line code change: replace docker build with depot build. For example, if you're running build or bake commands locally, you can switch to using the same commands with the Depot CLI:

# to replace docker build

depot build -t my-image:latest --platform linux/amd64,linux/arm64 .

# to replace docker bake

depot bake -f docker-bake.hcl

The depot build command accepts the same arguments as docker build, so you can use it in your existing workflows without any changes. Depot's build infrastructure for container builds requires zero configuration and automatically includes a persistent build cache and support for native multi-platform image builds.

To see a Depot container build in action, try the quickstart.

Use cases for Depot container builds

Depot container builds benefit any team that builds and ships containerized applications. Opportunities to optimize your development workflows include:

- Building your Docker image is slow in CI: Many CI providers force you to save and load your Docker layer cache via bulky tarballs over slow, flaky networks. Sometimes CI providers offer limited resources like slower CPUs, memory, and disks that result in longer overall build times.

  Depot works within your existing workflows: When you replace docker build with depot build, your Docker layer cache is automatically persisted to a fast NVMe SSD and your build runs on a remote BuildKit host. You don't need to save and load layer cache over networks. For more information, see Continuous integration.

- You're building images for multiple platforms or multiple architectures: Maybe you're stuck with managing your own build runner or relying on slow emulation to build multi-platform images (x86 and ARM). For example, CI providers usually run their workflows on Intel (x86) machines. If you want to create a Docker image for ARM, you either have to launch your own BuildKit builder for ARM and connect to it from your CI provider, or build your ARM image with slow QEMU emulation.

  Depot can build multi-platform and ARM images natively with zero emulation and without running additional infrastructure.

- Building your Docker image on your local machine is slow or expensive: Docker can hog resources on developer machines, taking up valuable network, CPU, and memory resources.

  Depot executes builds on remote compute infrastructure and the remote builder instance takes care of the CPU, memory, disk, and network resources required for your builds. Learn more about local development with Depot.

- You want to share build results with a team: Without shared infrastructure, each developer and CI job has to rebuild Docker layers that teammates have already built, wasting time and compute resources.

  Depot projects provide persistent, remotely accessible build caches. Your whole team can send builds to the same project and automatically reuse each other's Docker layer cache. If your coworker has already built an image, your depot build command for the same image reuses those layers without rebuilding them.

Feature summary

The following table summarizes the key features of Depot container builds.

| Feature |
|---|
| Build isolation and acceleration |
| Native x86 and ARM builds |
| Persistent shared caching |
| Drop-in replacement |
| CI provider integrations |
| Dev tools integrations |
| Build autoscaling |
| Depot Registry |
| Build metrics |

How Depot container builds work

Understand the core concepts of Depot container builds.

Projects

A project in Depot is a cache namespace that's isolated from other projects in your organization at a cache and hardware level. You can use a project for a single application, git repository, or Dockerfile.

All your container builds run within a project. You can create multiple projects within an organization to manage your container builds.

Once you create a project, you can use Depot container builds from your local machine or an existing CI workflow by swapping docker for depot.

Builds

Builder instances are ephemeral. They terminate after each build. Your build cache is persistent and remains available across builds. Within a project, every new builder instance uses the project's persistent cache.

Builder instances

Builder instances run an optimized version of BuildKit.

Builder instances come in the following sizes, configurable by project:

| Builder size | CPUs | Memory | Plans |

|---|---|---|---|

| Default | 16 | 32 GB | All |

| Large | 32 | 64 GB | Startup and Business |

| Extra large | 64 | 128 GB | Startup and Business |

For pricing by size, see Builder instance pricing by size.

By default, all builds for a project route to a single builder instance per architecture you're building. Depot can build images on both x86 and ARM machines supporting the following platforms:

- linux/amd64

- linux/arm64

- linux/arm/v6

- linux/arm/v7

- linux/386

Both architectures build native multi-platform images with zero emulation.
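As a small sketch of how you might guard a multi-platform build in a script, the helper below checks each requested platform against the supported list and assembles the value for the --platform flag. The function name platform_flag is a hypothetical helper, not part of the Depot CLI, and the platform strings are written in the lowercase form that Docker uses.

```shell
# platform_flag PLATFORM...
# Prints "--platform a,b,c" if every platform is in the supported list.
# Returns non-zero for an unsupported platform or when none are given.
platform_flag() {
  local supported="linux/amd64 linux/arm64 linux/arm/v6 linux/arm/v7 linux/386"
  local joined=""
  local p
  for p in "$@"; do
    case " $supported " in
      *" $p "*) joined="${joined:+$joined,}$p" ;;
      *) echo "unsupported platform: $p" >&2; return 1 ;;
    esac
  done
  [ -n "$joined" ] && echo "--platform $joined"
}
```

For example, platform_flag linux/amd64 linux/arm64 prints --platform linux/amd64,linux/arm64, which matches the flag used in the depot build example earlier on this page.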

Open-source repository build isolation

For open-source repositories, builds launched by fork pull requests are isolated to ephemeral builder instances with no read or write access to the project cache. Isolating these builds prevents cache poisoning and protects sensitive data from untrusted code. You'll see these types of builds labeled as isolated in the Depot dashboard.

Cache

By default, the image built on a Depot builder instance stays in your project's persistent build cache for reuse in future builds.

You can also download the image to your local Docker daemon with depot build --load or push it directly to a registry with depot build --push. When you use --push, we push the image directly from the remote builder instance to your registry over high-speed network links, not through your local network. Learn more about pushing to private Docker registries like Amazon ECR or Docker Hub.
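The three outcomes described above (leave the image in the project cache, load it into the local Docker daemon, or push it to a registry) can be sketched as a small wrapper around depot build. The function name build_image, its target names, and the DEPOT_BIN override are illustrative assumptions; only the -t, --load, and --push flags come from the Depot CLI.

```shell
# DEPOT_BIN lets you point the helper at a different binary (assumption for
# illustration; by default it runs the depot CLI found on your PATH).
DEPOT_BIN="${DEPOT_BIN:-depot}"

# build_image TARGET IMAGE
#   TARGET=cache     build only; the result stays in the project cache
#   TARGET=local     build and load the image into the local Docker daemon
#   TARGET=registry  build and push straight from the builder to the registry
build_image() {
  local target="$1"
  local image="$2"
  case "$target" in
    cache)    "$DEPOT_BIN" build -t "$image" . ;;
    local)    "$DEPOT_BIN" build -t "$image" --load . ;;
    registry) "$DEPOT_BIN" build -t "$image" --push . ;;
    *) echo "unknown target: $target" >&2; return 2 ;;
  esac
}
```

For example, build_image registry my-image:latest runs depot build -t my-image:latest --push . from the current directory.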

Configurable cache policies per project

When you trigger a build and the cache is full, BuildKit automatically removes layers based on two configurable policies:

- Cache Retention Policy: Cache layers older than 7, 14, or 30 days are automatically removed.

- Cache Storage Policy: When the cache volume reaches the set limit, the least recently used layers are removed first. The default cache size for a Depot project is 50 GB. You can specify a cache storage policy up to 1000 GB in your project settings.

Configure these settings in the project settings page. Removing layers won't cause builds to fail; the layers get rebuilt on the next build that needs them.
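To make the interaction of the two policies concrete, here is a deliberately simplified model of them. It is not BuildKit's actual garbage collector: it ignores dependencies between layers, and the function name plan_evictions, the input format, and the sample layers are all made up for illustration.

```shell
# plan_evictions RETENTION_DAYS LIMIT_GB
# Reads "name days_since_last_use size_gb" lines on stdin and prints the
# layers this simplified model would remove, with the policy responsible.
plan_evictions() {
  # Sort so the least recently used layers come first.
  sort -k2,2nr | awk -v keep_days="$1" -v limit_gb="$2" '
    { name[NR] = $1; age[NR] = $2; size[NR] = $3; total += $3 }
    END {
      # Retention policy: drop layers not used within keep_days.
      for (i = 1; i <= NR; i++)
        if (age[i] > keep_days) {
          print name[i], "retention"
          total -= size[i]
          gone[i] = 1
        }
      # Storage policy: drop least recently used layers until under the limit.
      for (i = 1; i <= NR && total > limit_gb; i++)
        if (!gone[i]) {
          print name[i], "storage"
          total -= size[i]
        }
    }'
}

# Hypothetical cache contents: 75 GB in total.
printf '%s\n' 'base 40 10' 'deps 10 30' 'app 1 20' 'test 5 15' | plan_evictions 30 50
```

With a 30-day retention policy and a 50 GB storage policy, the model removes base under the retention policy (last used 40 days ago) and then deps under the storage policy (the least recently used layer remaining), which brings the cache down to 35 GB.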

Cache removal criteria

BuildKit stores layers as differentials, where each layer depends on underlying layers. BuildKit won't remove an underlying layer that a recently used layer depends on. Those underlying layers remain in the cache even when they haven't been directly accessed within the retention policy timeframe. For example, base image layers often appear as "last used over a month ago", but are retained in the cache because other layers depend on them. For the same reason, it's possible that your cache size exceeds your cache storage policy setting.

To fully remove parent layers, click Reset cache in the Danger Area section of the project settings page.

Deep dive: Technical architecture

A deeper dive into the design and architecture of Depot container builds.

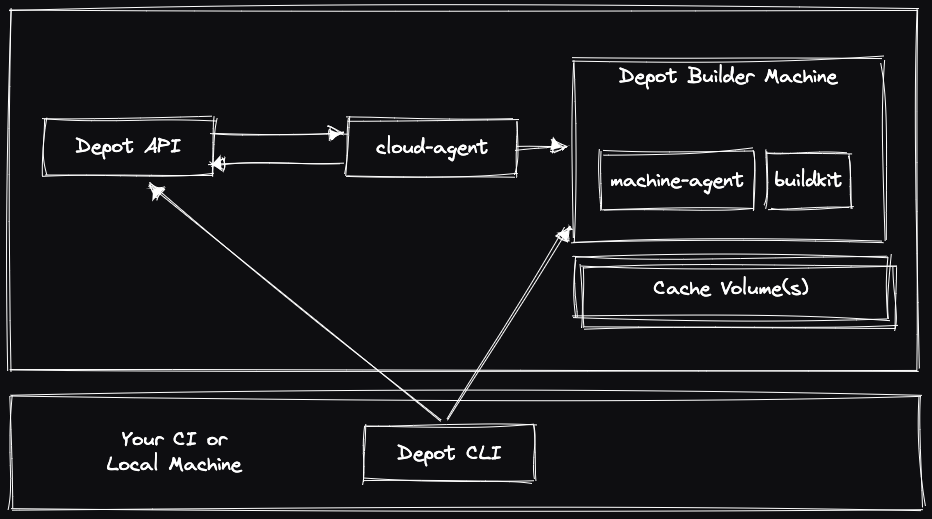

The architecture for Depot remote container builds consists of the Depot CLI, a control plane, an open-source cloud-agent, and builder instances (virtual machines) running our open-source machine-agent and BuildKit with associated cache volumes.

The flow of a Docker image build using Depot looks like this:

- The Depot CLI asks the Depot API for a new builder machine connection (with organization ID, project ID, and the required architecture) and polls the API for when a machine is ready.

- The Depot API stores the pending request for a builder.

- A cloud-agent process periodically reports the current status to the Depot API and asks for any pending infrastructure changes.

- For a pending build, the cloud-agent process receives a description of the machine to start and then launches it.

- When the machine launches, a machine-agent process running inside the virtual machine registers itself with the Depot API and receives the instruction to launch BuildKit with specific mTLS certificates provisioned for the build.

- After the machine-agent reports that BuildKit is running, the Depot API returns a successful response to the Depot CLI, along with new mTLS certificates to secure and authenticate the build connection.

- The Depot CLI uses the new mTLS certificates to directly connect to the builder instance, using that machine and cache volume for the build.

We use the same architecture for Depot Managed builders, the only difference being where the cloud-agent and builder virtual machines launch.
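The part of this flow where the CLI polls the API until a machine is ready is, in essence, a bounded polling loop. The sketch below shows that pattern only: wait_for_builder and the status command passed to it are hypothetical stand-ins, not the Depot CLI's real implementation or the Depot API.

```shell
# wait_for_builder STATUS_CMD [MAX_ATTEMPTS] [DELAY_SECONDS]
# STATUS_CMD is any command or function that prints "ready" once the builder
# is available (a hypothetical stand-in for the API request the CLI makes).
wait_for_builder() {
  local status_cmd="$1"
  local max_attempts="${2:-30}"
  local delay="${3:-2}"
  local attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    if [ "$("$status_cmd")" = "ready" ]; then
      echo "builder ready after $attempt poll(s)"
      return 0
    fi
    sleep "$delay"
    attempt=$((attempt + 1))
  done
  echo "builder not ready after $max_attempts poll(s)" >&2
  return 1
}
```

Capping the number of attempts means a builder that never becomes ready produces a clear failure instead of a build that hangs indefinitely.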

Pricing

Container builds are tracked by the second, with no one-minute minimum per build. We calculate total minutes used at the end of the billing period.

| | Developer plan | Startup plan | Business plan |

|---|---|---|---|

| Plan cost | $20/month | $200/month | Custom |

| Build minutes | 500 included | 5,000 included + $0.004/minute after | Custom |

| Cache | 25 GB included + $0.20/GB/month after | 250 GB included + $0.20/GB/month after | Custom |

All plans include:

- Unlimited concurrency

- Native multi-platform builds

- Default builder instances with 16 CPUs and 32 GB of memory

Plans also include GitHub Actions runner minutes. See Pricing for more about plan features and costs.

Builder instance pricing by size

Startup and Business plan customers can configure larger builder instances. Large and Extra large builder instances consume your included build minutes faster (via multipliers) and cost more per minute.

| Builder size | CPUs | Memory | Per-minute price | Minutes multiplier |

|---|---|---|---|---|

| Default | 16 | 32 GB | $0.004 | 1x |

| Large | 32 | 64 GB | $0.008 | 2x |

| Extra large | 64 | 128 GB | $0.016 | 4x |
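As a worked example of the table above, the sketch below converts a build's duration into included minutes consumed and into a cost at the listed per-minute price. It assumes usage is simply seconds divided by 60 with no rounding; the function name build_usage and the size keys default, large, and xlarge are hypothetical, and how Depot rounds or applies included minutes on an actual invoice may differ.

```shell
# build_usage SIZE SECONDS
# Prints "<included minutes consumed> <USD at the per-minute price>".
# Prices and multipliers are copied from the table above.
build_usage() {
  awk -v size="$1" -v secs="$2" 'BEGIN {
    price["default"] = 0.004; mult["default"] = 1
    price["large"]   = 0.008; mult["large"]   = 2
    price["xlarge"]  = 0.016; mult["xlarge"]  = 4
    if (!(size in price)) {
      print "unknown size: " size > "/dev/stderr"
      exit 2
    }
    minutes = secs / 60   # billed by the second, so no one-minute minimum
    printf "%.2f %.4f\n", minutes * mult[size], minutes * price[size]
  }'
}

build_usage large 90   # prints: 3.00 0.0120
```

Here a 90-second build on a Large builder is 1.5 minutes of build time, which counts as 3 included minutes at the 2x multiplier, or about $0.012 at the Large per-minute price.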

Additional container build minutes

Startup and Business plan customers can pay for additional container build minutes on a per-minute basis.

FAQ

How many builds can a project run concurrently?

You can run unlimited concurrent builds against a single Depot project. By default, all builds for the same architecture run on a single VM with fixed resources (16 CPUs, 32 GB RAM), so concurrent builds share these resources. To give each build dedicated resources, enable autoscaling in your project settings. For more information, see Build parallelism in Depot.

How do I push my images to a private registry?

You can use the --push flag to push your images to a private registry. Our depot CLI uses your local Docker credentials provider. So, any registry you've logged into with docker login or similar will be available when running a Depot build. For more information, see Private registries.

Can I build Docker images for M4 macOS devices?

Yes! Depot supports native ARM container builds out of the box. We detect the architecture of the machine requesting a build via depot build. If that architecture is ARM, we route the build to a builder running ARM natively. You can build Docker images for M4 macOS devices and run the resulting image immediately, as it is made specifically for your architecture. Learn more about building Docker ARM images with Depot.

Can I build multi-platform Docker images?

Yes! Check out our integration guide.

How should I use Depot with a monorepo setup?

If you're building multiple images from a single monorepo, and the builds are lightweight, we tend to recommend using a single project. But we detail some other options in our monorepo guide.

Can I use Depot with my existing `docker build` or `docker buildx build` commands?

Yes! We have a depot configure-docker command that configures Depot as a plugin for the Docker CLI and sets Depot as the default builder for both docker build and docker buildx build. For more information, see our Docker build docs.

What are these extra files in my registry?

Registries like Amazon Elastic Container Registry (ECR) and Google Container Registry (GCR) don't accurately display provenance information for a given image. Provenance is a set of metadata that describes how an image was built. This metadata is stored in the registry alongside the image. It's enabled by default in docker build and thus by default in depot build as well.

If you would like to clean up the clutter, you can run your build with --provenance=false:

depot build -t <your-registry> --push --provenance=false .

Does Depot support building images in any lazy-pulling compatible formats like estargz, nydus, or others?

Depot supports building images in any lazy-pulling compatible format. You can build an estargz image by setting the --output flag at build time:

depot build \
  --output "type=image,name=repo/image:tag,push=true,compression=estargz,oci-mediatypes=true,force-compression=true" \
  .

Does Depot support building images with zstd compression?

Depot supports building images with zstd compression, a popular compression format to help speed up the launching of containers in AWS Fargate and Kubernetes. You can build an image with zstd compression by setting the --output flag at build time:

depot build \
  --output type=image,name=$IMAGE_URI:$IMAGE_TAG,oci-mediatypes=true,compression=zstd,compression-level=3,force-compression=true,push=true \
  .

Why is my build labeled as "isolated"?

We label builds as isolated in the Depot dashboard when they're launched by GitHub Actions for an open-source pull request. It's a build that didn't have access to read from or write to the project cache, to prevent untrusted code from accessing sensitive data.

Next steps

- Quickstart for Depot container builds

- Optimal Dockerfiles for Depot container build cache

- Local development with Depot container builds