# Depot Documentation

## Authentication

---

title: Authentication

ogTitle: Authentication for Depot remote caching

description: Learn how to authenticate with Depot remote caching

---

Depot Cache supports authenticating with user tokens and organization tokens. Additionally, [Depot-managed GitHub Actions runners](/docs/github-actions/overview) are pre-configured with single-use job tokens.

## Token types

- **User tokens** are used to authenticate as a specific user and can be generated from your [user settings](/settings) page.

- **Organization tokens** are used to authenticate as an organization. These tokens can be generated from your organization's settings page.

- **Depot GitHub Actions runners** are pre-configured with single-use job tokens. If you are using the automatic Depot Cache integration with Depot runners, you do not need to manually configure authentication.

## Configuring build tools

For specific details on how to configure your build tools to authenticate with Depot Cache, refer to the following guides:

- [Bazel](/docs/cache/reference/bazel)

- [Go](/docs/cache/reference/gocache)

- [Gradle](/docs/cache/reference/gradle)

- [Pants](/docs/cache/reference/pants)

- [sccache](/docs/cache/reference/sccache)

- [Turborepo](/docs/cache/reference/turbo)

## Depot Cache

---

title: Depot Cache

ogTitle: Overview of Depot remote caching

description: Learn how to use Depot remote cache for exponentially faster builds for tools like Bazel, Go, Turborepo, sccache, Pants, and Gradle.

---

import {CacheToolLogoGrid} from '~/components/docs/CacheToolLogoGrid'

**Depot Cache** is our remote caching service that speeds up your builds by providing incremental builds and accelerated tests, both locally and inside of your favorite CI provider.

One of the biggest benefits of adopting advanced build tools like Bazel is the ability to build only the parts of your codebase that have changed: in other words, incremental builds. A build cache makes this possible by reusing previously built artifacts that have not changed.

## Supported tools

Depot Cache integrates with build tools that support remote caching like Bazel, Go, Turborepo, sccache, Pants, and Gradle. For information about how to configure each tool to use Depot Cache, see that tool's reference guide.

Don't see a tool that supports remote caching that you use? Let us know in our [Discord Community](https://discord.gg/MMPqYSgDCg)!

## How does it work?

Supported build tools can be configured to use Depot Cache, so that they store and retrieve build artifacts from Depot's remote cache. That cache can then be used from local development environments, CI/CD systems, or anywhere else you run your builds.

This speeds up your builds and tests by orders of magnitude, especially for large codebases, as those builds and tests become incremental. Instead of always having to rebuild from scratch, only the parts of your codebase that have changed are rebuilt, and only affected tests are re-run.

## Where can I use Depot Cache?

Depot Cache is accessible anywhere you run your builds, in local development or from any CI/CD system. Additionally, all supported tools are pre-configured to use Depot Cache when using [Depot GitHub Actions Runners](/docs/github-actions/overview).

This means that build artifacts are shared between different members of your team and sequential CI/CD jobs, making these builds and tests incremental.

## Pricing

Depot Cache is available on all of our pricing plans. Each plan includes a block of cache storage. Each additional GB over the included amount is billed at **$0.20/GB/month**. See our [pricing page](/pricing) for more details.
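As a worked example of the overage rate, the sketch below computes the monthly charge for storage above a plan's included block. The function name `cache_overage_cost` and the 100 GB included amount in the usage line are hypothetical; check the pricing page for the allowance on your plan.

```shell
# Hypothetical helper: monthly overage charge in dollars.
# $1 = cache storage used (GB)
# $2 = storage included in the plan (GB); an assumed value, not a real plan limit
cache_overage_cost() {
  awk -v used="$1" -v included="$2" 'BEGIN {
    extra = used - included
    if (extra < 0) extra = 0
    printf "%.2f\n", extra * 0.20
  }'
}

# Example: 150 GB used on a plan assumed to include 100 GB
cache_overage_cost 150 100   # prints 10.00
```

Usage at or below the included amount produces `0.00`, since only the additional gigabytes are billed at $0.20/GB/month.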

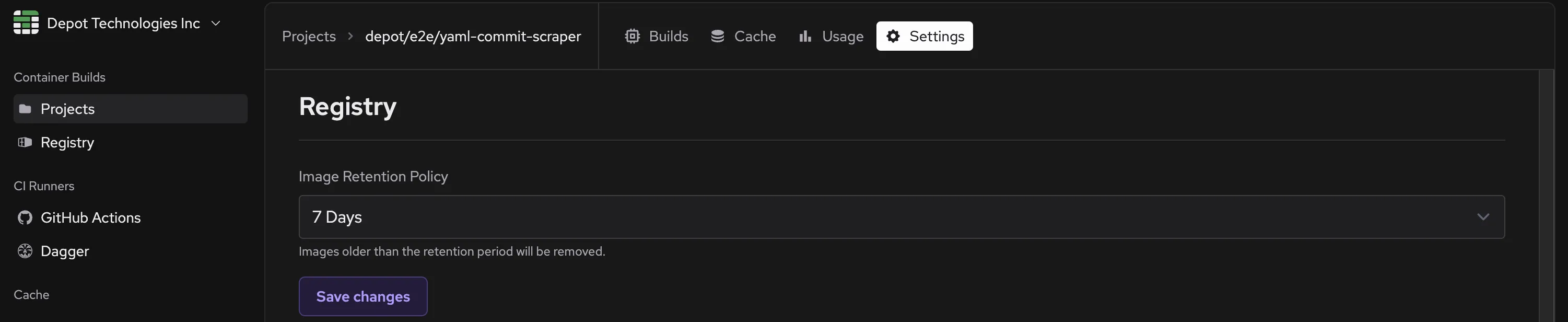

## Cache Retention

Depot Cache retains build artifacts for a configurable amount of time. By default, artifacts are retained for 14 days. You can configure this retention period in the Depot Cache settings.

## Bazel

---

title: Bazel

ogTitle: Remote caching for Bazel builds

description: Learn how to use Depot remote caching for Bazel builds

---

[**Bazel**](https://bazel.build/) is a build tool that builds code quickly and reliably. It is used by many large projects, including Google, and is optimized for incremental builds with advanced local and remote caching and parallel execution. Bazel supports many different languages and platforms, and is highly configurable, scaling to codebases of any size.

[**Depot Cache**](/docs/cache/overview) provides a remote cache service that can be used with Bazel, allowing you to incrementally cache and reuse parts of your builds. This cache is accessible from anywhere, both on your local machine and on CI/CD systems.

## Configuring Bazel to use Depot Cache

Depot Cache can be used with Bazel from Depot's managed GitHub Actions runners, from your local machine, or from any CI/CD system.

### From Depot-managed Actions runners

[Depot GitHub Actions runners](/docs/github-actions/overview) are pre-configured to use Depot Cache with Bazel - each runner is launched with a `$HOME/.bazelrc` file that is pre-populated with the connection details for Depot Cache.

If this automatic configuration is incompatible with your specific setup, you can disable automatic configuration in your organization settings page and manually configure Bazel to use Depot Cache as described below.

### From your local machine or any CI/CD system

To manually configure Bazel to use Depot Cache, you will need to set two build flags in your `.bazelrc` file. Configure Bazel to use the Depot Cache service endpoint and set your API token as the `authorization` header:

```bash

build --remote_cache=https://cache.depot.dev

build --remote_header=authorization=DEPOT_TOKEN

```

If you are a member of multiple organizations, and you are authenticating with a user token, you must additionally specify which organization to use for cache storage with the `x-depot-org` header:

```bash

build --remote_header=x-depot-org=DEPOT_ORG_ID

```
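If you prefer to generate these flags from environment variables rather than hard-coding a token, a small shell helper is one option. This is only a sketch: the function name `depot_bazelrc` is hypothetical, and it assumes the token and organization ID are passed in as arguments.

```shell
# Hypothetical helper: print the .bazelrc lines for Depot Cache.
# $1 = Depot token
# $2 = optional organization ID (only needed for user tokens that
#      belong to multiple organizations)
depot_bazelrc() {
  printf 'build --remote_cache=https://cache.depot.dev\n'
  printf 'build --remote_header=authorization=%s\n' "$1"
  if [ -n "${2:-}" ]; then
    printf 'build --remote_header=x-depot-org=%s\n' "$2"
  fi
}

# Usage: append the generated flags to a user-level .bazelrc
# depot_bazelrc "$DEPOT_TOKEN" "$DEPOT_ORG_ID" >> "$HOME/.bazelrc"
```

Keeping the token out of a checked-in `.bazelrc` avoids committing a secret to your repository.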

## Using Depot Cache with Bazel

Once Bazel is configured to use Depot Cache, you can then run your builds as you normally would. Bazel will automatically communicate with Depot Cache to fetch and reuse any stored build artifacts from your previous builds.

## Go Cache

---

title: Go Cache

ogTitle: Remote caching for Go builds and tests

description: Learn how to use Depot remote caching for Go

---

## Configuring Go to use Depot Cache

Depot Cache can be used with Go from Depot's managed GitHub Actions runners, from your local machine, or from any CI/CD system.

### From Depot-managed Actions runners

[Depot GitHub Actions runners](/docs/github-actions/overview) are pre-configured to use Depot Cache with Go - each runner is launched with the `GOCACHEPROG` environment variable pre-populated with the connection details for Depot Cache.

If this automatic configuration is incompatible with your specific setup, you can disable automatic configuration in your organization settings page and manually configure `GOCACHEPROG` to use Depot Cache as described below.

### From your local machine or any CI/CD system

To manually configure Go to use Depot Cache, set the `GOCACHEPROG` environment variable:

```shell

export GOCACHEPROG="depot gocache"

```

The `depot` CLI will need to have [authorization](/docs/cli/authentication) to write to the cache.

If you are a member of multiple organizations, and you are authenticating with a user token, you must instead specify which organization should be used for cache storage as follows:

```shell

export GOCACHEPROG='depot gocache --organization ORG_ID'

```

To clean the cache, you can use the typical `go clean` workflow:

```shell

go clean -cache

```

To enable verbose output, add the `--verbose` option:

```shell

export GOCACHEPROG='depot gocache --verbose'

```
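The options above can be composed into a single `GOCACHEPROG` value. The helper below is a sketch: the function name `depot_gocacheprog` is hypothetical, and combining `--organization` with `--verbose` in one command is an assumption, since the examples above show each flag on its own.

```shell
# Hypothetical helper: build the GOCACHEPROG value.
# $1 = optional organization ID (empty string to skip)
# $2 = "verbose" to add the --verbose option
depot_gocacheprog() {
  prog="depot gocache"
  if [ -n "${1:-}" ]; then
    prog="$prog --organization $1"
  fi
  if [ "${2:-}" = "verbose" ]; then
    prog="$prog --verbose"
  fi
  printf '%s\n' "$prog"
}

# Usage (ORG_ID is a placeholder):
# export GOCACHEPROG="$(depot_gocacheprog ORG_ID verbose)"
```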

## Using Depot Cache with Go

Once Go is configured to use Depot Cache, you can then run your builds as you normally would. Go will automatically communicate with `GOCACHEPROG` to fetch from Depot Cache and reuse any stored build artifacts from your previous builds.

## Gradle

---

title: Gradle

ogTitle: Remote caching for Gradle builds

description: Learn how to use Depot remote caching for Gradle builds

---

[**Gradle**](https://gradle.org/) is the build tool of choice for Java, Android, and Kotlin. It is used in many large projects, including Android itself, and is optimized for incremental builds, advanced local and remote caching, and parallel execution. Gradle supports many different languages and platforms, and is highly configurable, scaling to codebases of any size.

[**Depot Cache**](/docs/cache/overview) provides a remote cache service that can be used with Gradle, allowing you to incrementally cache and reuse parts of your builds. This cache is accessible from anywhere, both on your local machine and on CI/CD systems.

## Configuring Gradle to use Depot Cache

Depot Cache can be used with Gradle from Depot's managed GitHub Actions runners, from your local machine, or from any CI/CD system.

### From Depot-managed Actions runners

[Depot GitHub Actions runners](/docs/github-actions/overview) are pre-configured to use Depot Cache with Gradle - each runner is launched with an `init.gradle` file that is pre-populated with the connection details for Depot Cache. You will need to verify that caching is enabled in your `gradle.properties` file.

```properties

org.gradle.caching=true

```

If this automatic configuration is incompatible with your specific setup, you can disable automatic configuration in your organization settings page and manually configure Gradle to use Depot Cache as described below.

### From your local machine or any CI/CD system

To manually configure Gradle to use Depot Cache, you will need to configure remote caching in your `settings.gradle` file. Configure Gradle to use the Depot Cache service endpoints and set your API token as the `password` credential:

`settings.gradle`:

```groovy

buildCache {
  remote(HttpBuildCache) {
    url = 'https://cache.depot.dev'
    enabled = true
    push = true
    credentials {
      username = ''
      password = 'DEPOT_TOKEN'
    }
  }
}

```

If you are a member of multiple organizations, and you are authenticating with a user token, you must additionally specify which organization ID to use for cache storage in the username:

```groovy

buildCache {
  remote(HttpBuildCache) {
    url = 'https://cache.depot.dev'
    enabled = true
    push = true
    credentials {
      username = 'DEPOT_ORG_ID'
      password = 'DEPOT_TOKEN'
    }
  }
}

```

## Using Depot Cache with Gradle

Once Gradle is configured to use Depot Cache, you can then run your builds as you normally would. Gradle will automatically communicate with Depot Cache to fetch and reuse any stored build artifacts from your previous builds.

## Maven

---

title: Maven

ogTitle: Remote caching for Maven builds

description: Learn how to use Depot remote caching for Maven builds

---

[**Maven**](https://maven.apache.org/) is a build automation and project management tool primarily used for Java projects that helps developers manage dependencies, build processes, and documentation in a centralized way. It follows a convention-over-configuration approach by providing a standard project structure and build lifecycle, allowing teams to quickly begin development without extensive configuration.

[**Depot Cache**](/docs/cache/overview) provides a remote cache service that can be used with Maven, allowing you to incrementally cache and reuse parts of your builds. This cache is accessible from anywhere, both on your local machine and on CI/CD systems.

## Configuring Maven to use Depot Cache

Depot Cache can be used with Maven from Depot's managed GitHub Actions runners, your local machine, or any CI/CD system.

### From Depot-managed Actions runners

[Depot GitHub Actions runners](/docs/github-actions/overview) are pre-configured to use Depot Cache with Maven - each runner is launched with a `settings.xml` file that is pre-populated with the connection details for Depot Cache. You must verify that remote caching is enabled via the [Maven Build Cache extension](https://maven.apache.org/extensions/maven-build-cache-extension/index.html) in `.mvn/maven-build-cache-config.xml`:

```xml

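<cache xmlns="http://maven.apache.org/BUILD-CACHE-CONFIG/1.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/BUILD-CACHE-CONFIG/1.0.0 https://maven.apache.org/xsd/build-cache-config-1.0.0.xsd">
  <configuration>
    <enabled>true</enabled>
    <hashAlgorithm>SHA-256</hashAlgorithm>
    <validateXml>true</validateXml>
    <remote enabled="true" id="depot-cache">
      <url>https://cache.depot.dev</url>
    </remote>
  </configuration>
</cache>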

```

It is important to note that the `id` of your remote cache must be set to `depot-cache` for the Depot Cache service to work correctly in Depot GitHub Actions Runners. The cache will not be used if you use a different ID.

You should also verify that you have registered the Build Cache extension in your `pom.xml` file:

```xml

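<build>
  <extensions>
    <extension>
      <groupId>org.apache.maven.extensions</groupId>
      <artifactId>maven-build-cache-extension</artifactId>
      <version>1.0.1</version>
    </extension>
  </extensions>
</build>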

```

If this automatic configuration is incompatible with your specific setup, you can disable automatic configuration in your organization settings page and manually configure Maven to use Depot Cache, as described below.

### From your local machine or any CI/CD system

To manually configure Maven to use Depot Cache, you will need to configure remote caching in your `~/.m2/settings.xml` file. Configure Maven to use the Depot Cache service endpoints and replace `DEPOT_TOKEN` below with your API token:

`settings.xml`:

```xml

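<settings>
  <servers>
    <server>
      <id>depot-cache</id>
      <configuration>
        <httpHeaders>
          <property>
            <name>Authorization</name>
            <value>Bearer DEPOT_TOKEN</value>
          </property>
        </httpHeaders>
      </configuration>
    </server>
  </servers>
</settings>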

```

**Note: Maven currently supports only Depot organization API tokens, not user tokens.**

## Using Depot Cache with Maven

Once Maven is configured to use Depot Cache, you can run your builds as usual. Maven will automatically communicate with Depot Cache to fetch and reuse any stored build artifacts from your previous builds.

## moonrepo

---

title: moonrepo

ogTitle: Remote caching for moonrepo builds

description: Learn how to use Depot remote caching for moonrepo builds

---

[**moonrepo**](https://moonrepo.dev/) is a repository management, organization, orchestration, and notification tool for the web ecosystem, written in Rust. Many of the concepts within moon are heavily inspired by Bazel and other popular build systems.

[**Depot Cache**](/docs/cache/overview) provides a remote cache service that can be used with moonrepo, allowing you to incrementally cache and reuse parts of your builds. This cache is accessible from anywhere, both on your local machine and on CI/CD systems.

## Configuring moonrepo to use Depot Cache

Depot Cache can be used with moonrepo from Depot's managed GitHub Actions runners, from your local machine, or from any CI/CD system.

To configure `moon` to use Depot Cache, you will need to set a `DEPOT_TOKEN` environment variable with an organization or user token and add the following to your `.moon/workspace.yml` file:

```yaml

unstable_remote:
  host: 'grpcs://cache.depot.dev'
  auth:
    token: 'DEPOT_TOKEN'

```

If you are using a user token and are a member of more than one organization, you will additionally need to set an `X-Depot-Org` header to your Depot organization ID in `.moon/workspace.yml`:

```yaml

unstable_remote:
  host: 'grpcs://cache.depot.dev'
  auth:
    token: 'DEPOT_TOKEN'
    headers:
      'X-Depot-Org': '' # set this to your Depot organization ID

```

See [moonrepo's remote cache documentation](https://moonrepo.dev/docs/guides/remote-cache#cloud-hosted-depot) for more details.

## Using Depot Cache with moonrepo

Once moonrepo is configured to use Depot Cache, you can then run your builds as you normally would. moonrepo will automatically communicate with Depot Cache to fetch and reuse any stored build artifacts from your previous builds.

## Pants

---

title: Pants

ogTitle: Remote caching for Pants builds

description: Learn how to use Depot remote caching for Pants builds

---

[**Pants**](https://www.pantsbuild.org/) is an ergonomic build tool for codebases of all sizes and supports Python, Go, Java, Scala, Kotlin, Shell, and Docker. It is used in many large projects, including Coinbase, IBM, and Slack, and is optimized for fine-grained incremental builds with advanced local and remote caching. Pants is highly configurable and can scale to codebases of any size.

[**Depot Cache**](/docs/cache/overview) provides a remote cache service that can be used with Pants, allowing you to incrementally cache and reuse parts of your builds. This cache is accessible from anywhere, both on your local machine and on CI/CD systems.

## Configuring Pants to use Depot Cache

Depot Cache can be used with Pants from Depot's managed GitHub Actions runners, from your local machine, or from any CI/CD system.

### From Depot-managed Actions runners

[Depot GitHub Actions runners](/docs/github-actions/overview) are pre-configured to use Depot Cache with Pants - each runner is launched with a `pants.toml` file that is pre-configured with the connection details for Depot Cache.

If this automatic configuration is incompatible with your specific setup, you can disable automatic configuration in your organization settings page and manually configure Pants to use Depot Cache as described below.

### From your local machine or any CI/CD system

To manually configure Pants to use Depot Cache, you will need to enable remote caching in your `pants.toml`. Configure Pants to use the Depot Cache service endpoints and set your API token in the `Authorization` header:

`pants.toml`:

```toml

[GLOBAL]

# Enable remote caching

remote_cache_read = true

remote_cache_write = true

# Point remote caching to Depot Cache

remote_store_headers = { "Authorization" = "DEPOT_TOKEN" }

remote_store_address = "grpcs://cache.depot.dev"

```

If you are a member of multiple organizations, and you are authenticating with a user token, you must additionally specify which organization to use for cache storage using the `x-depot-org` header:

```toml

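# Keep the Authorization header and add x-depot-org in the same table;
# TOML does not allow defining remote_store_headers twice.
remote_store_headers = { "Authorization" = "DEPOT_TOKEN", "x-depot-org" = "DEPOT_ORG_ID" }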

```

## Using Depot Cache with Pants

Once Pants is configured to use Depot Cache, you can then run your builds as you normally would. Pants will automatically communicate with Depot Cache to fetch and reuse any stored build artifacts from your previous builds.

## sccache

---

title: sccache

ogTitle: Remote caching for sccache builds

description: Learn how to use Depot remote caching for sccache builds

---

[**sccache**](https://github.com/mozilla/sccache) is a ccache-like compiler caching tool that was created by Mozilla. It is a compiler wrapper that avoids compilation when possible and stores cached results locally or in remote storage. It supports caching the compilation of several languages including C, C++, and Rust. sccache is used in many large projects, including Firefox, and is optimized for incremental builds and advanced local and remote caching.

[**Depot Cache**](/docs/cache/overview) provides a remote cache service that can be used with sccache, allowing you to incrementally cache and reuse parts of your builds. This cache is accessible from anywhere, both on your local machine and on CI/CD systems.

## Configuring sccache to use Depot Cache

Depot Cache can be used with sccache from Depot's managed GitHub Actions runners, from your local machine, or from any CI/CD system.

### From Depot-managed Actions runners

[Depot GitHub Actions runners](/docs/github-actions/overview) are pre-configured to use Depot Cache with sccache - each runner is launched with a `SCCACHE_WEBDAV_ENDPOINT` environment variable and is pre-configured with the connection details for Depot Cache.

If this automatic configuration is incompatible with your specific setup, you can disable automatic configuration in your organization settings page and manually configure sccache to use Depot Cache as described below.

### From your local machine or any CI/CD system

To manually configure sccache to use Depot Cache, you will need to set two environment variables in your environment, representing the Depot Cache service endpoint and your API token:

```shell

export SCCACHE_WEBDAV_ENDPOINT=https://cache.depot.dev

export SCCACHE_WEBDAV_TOKEN=DEPOT_TOKEN

```

If you are a member of multiple organizations, and you are authenticating with a user token, you must instead pass the token as the WebDAV password and specify the organization to use for cache storage as the username:

```shell

export SCCACHE_WEBDAV_ENDPOINT=https://cache.depot.dev

export SCCACHE_WEBDAV_USERNAME=DEPOT_ORG_ID

export SCCACHE_WEBDAV_PASSWORD=DEPOT_TOKEN

```
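The choice between the two variable sets can be sketched as a shell function. The function name `configure_sccache_depot` is hypothetical; the environment variable names are the ones shown above.

```shell
# Hypothetical helper: export sccache WebDAV settings for Depot Cache.
# $1 = Depot token
# $2 = optional organization ID (user token with multiple organizations)
configure_sccache_depot() {
  export SCCACHE_WEBDAV_ENDPOINT=https://cache.depot.dev
  if [ -n "${2:-}" ]; then
    # Organization given: org ID as username, token as password
    unset SCCACHE_WEBDAV_TOKEN
    export SCCACHE_WEBDAV_USERNAME="$2"
    export SCCACHE_WEBDAV_PASSWORD="$1"
  else
    # No organization given: token-only authentication
    unset SCCACHE_WEBDAV_USERNAME SCCACHE_WEBDAV_PASSWORD
    export SCCACHE_WEBDAV_TOKEN="$1"
  fi
}

# Usage:
# configure_sccache_depot "$DEPOT_TOKEN"                  # token only
# configure_sccache_depot "$DEPOT_TOKEN" "$DEPOT_ORG_ID"  # with organization
```

Unsetting the unused variables keeps the two authentication styles from being mixed in a long-lived shell session.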

## Using Depot Cache with sccache

Once sccache is configured to use Depot Cache, you can then run your builds as you normally would. sccache will automatically communicate with Depot Cache to fetch and reuse any stored build artifacts from your previous builds.

## Turborepo

---

title: Turborepo

ogTitle: Remote caching for Turborepo builds

description: Learn how to use Depot remote caching for Turborepo builds

---

[**Turborepo**](https://turbo.build/) is a high-performance build system for JavaScript and TypeScript codebases, and is designed around scaling build performance for large monorepos. It is used by large projects at Netflix, AWS, and Disney, and supports incremental builds backed by local and remote cache options.

[**Depot Cache**](/docs/cache/overview) provides a remote cache service that can be used with Turborepo, allowing you to incrementally cache and reuse parts of your builds. This cache is accessible from anywhere, both on your local machine and on CI/CD systems.

## Configuring Turborepo to use Depot Cache

Depot Cache can be used with Turborepo from Depot's managed GitHub Actions runners, from your local machine, or from any CI/CD system.

### From Depot-managed Actions runners

[Depot GitHub Actions runners](/docs/github-actions/overview) are pre-configured to use Depot Cache with Turborepo - each runner is launched with a `TURBO_API` environment variable and is pre-configured with the connection details for Depot Cache.

If this automatic configuration is incompatible with your specific setup, you can disable automatic configuration in your organization settings page and manually configure Turborepo to use Depot Cache as described below.

### From your local machine or any CI/CD system

To manually configure Turborepo to use Depot Cache, you will need to set three environment variables. These represent the Depot Cache service endpoint, your API token, and your Depot organization ID:

```shell

export TURBO_API=https://cache.depot.dev

export TURBO_TOKEN=DEPOT_TOKEN

export TURBO_TEAM=DEPOT_ORG_ID

```
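Because all three variables are required, a quick pre-flight check in CI can catch a missing one before the build starts. This is a sketch; the function name `check_turbo_env` is hypothetical.

```shell
# Hypothetical helper: fail if any of the three Turborepo variables is unset.
check_turbo_env() {
  missing=""
  [ -n "${TURBO_API:-}" ] || missing="$missing TURBO_API"
  [ -n "${TURBO_TOKEN:-}" ] || missing="$missing TURBO_TOKEN"
  [ -n "${TURBO_TEAM:-}" ] || missing="$missing TURBO_TEAM"
  if [ -n "$missing" ]; then
    echo "missing variables:$missing" >&2
    return 1
  fi
  return 0
}

# Usage: run the check before invoking your usual Turborepo command
# check_turbo_env && echo "Depot Cache variables are set"
```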

## Using Depot Cache with Turborepo

Once Turborepo is configured to use Depot Cache, you can then run your builds as you normally would. Turborepo will automatically communicate with Depot Cache to fetch and reuse any stored build artifacts from your previous builds.

## Authentication

---

title: Authentication

ogTitle: Options for authenticating builds with the Depot CLI

description: We provide three different methods you can use to authenticate your container image builds.

---

We provide three different options you can use to authenticate your build to our remote Docker builders via the `depot` CLI.

## User access tokens

You can generate an access token tied to your Depot account that can be used for builds in any project in any organization you have access to. When you run `depot login`, we authenticate your account and generate a new user access token that all builds from your machine use by default. We recommend using these tokens only for local development and not in CI environments.

To generate a user access token, you can go through the following steps:

1. Open your [Account Settings](/settings)

2. Enter a description for your token under API Tokens

3. Click Create token

## Project tokens

Unlike user access tokens, project tokens are tied to a specific project in your organization and not a user account. These are ideal for building images with Depot from your existing CI provider. They are not tied to a single user account and are restricted to a single project in a single organization.

To generate a project token, you can go through the following steps:

1. Open your Project Details page by clicking on a project from your projects list

2. Click the Settings button next to your project ID

3. Enter a token description and click create token

## OIDC trust relationships

If you use GitHub Actions, CircleCI, Buildkite, or Mint as your CI provider, we can integrate directly with [GitHub Actions OIDC](https://docs.github.com/en/actions/deployment/security-hardening-your-deployments/about-security-hardening-with-openid-connect), [CircleCI OIDC](https://circleci.com/docs/openid-connect-tokens/), [Buildkite OIDC](https://buildkite.com/docs/agent/v3/cli-oidc), or [Mint](https://www.rwx.com/mint) via trust relationships. This token exchange is a great way to plug Depot into your existing Actions workflows, CircleCI jobs, or Buildkite pipelines, as it requires no static secrets, and credentials are short-lived.

You configure a trust relationship in Depot that allows your GitHub Actions workflows, CircleCI jobs, or Buildkite pipelines to access your project via a token exchange. The CI job requests an access token from Depot, and we check the request details to see if they match a configured trust relationship for your project. If everything matches, we generate a temporary access token and return it to the job. This temporary access token is only valid for the duration of the job that requested it.

### Adding a trust relationship for GitHub Actions

To add a trust relationship for GitHub Actions, you can go through the following steps:

1. Open your Project Details page by clicking on a project from your projects list

2. Click the Settings button next to your project ID

3. Click the Add trust relationship button

4. Select GitHub as the provider

5. Enter a GitHub User or Organization for the trust relationship

6. Enter the name of the GitHub repository that will build images via Depot (Note: this is the repository name, not the full URL and it must match the repository name exactly)

7. Click Add trust relationship

8. Ensure your workflow has permission to use this OIDC trust relationship by setting the permission `id-token: write`.

### Adding a trust relationship for CircleCI

To add a trust relationship for CircleCI, you can go through the following steps:

1. Open your Project Details page by clicking on a project from your projects list

2. Click the Settings button next to your project ID

3. Click the Add trust relationship button

4. Select CircleCI as the provider

5. Enter your CircleCI organization UUID (this is found in your CircleCI organization settings)

6. Enter your CircleCI project UUID (this is found in your CircleCI project settings)

7. Click Add trust relationship

**Note:** CircleCI requires entering your organization and project UUID, _not_ the friendly name of your organization or project.

### Adding a trust relationship for Buildkite

To add a trust relationship for Buildkite, you can go through the following steps:

1. Open your Project Details page by clicking on a project from your projects list

2. Click the Settings button next to your project ID

3. Click the Add trust relationship button

4. Select Buildkite as the provider

5. Enter the organization slug (i.e., `buildkite.com/<organization-slug>`)

6. Enter the pipeline slug (i.e., `buildkite.com/<organization-slug>/<pipeline-slug>`)

7. Click Add trust relationship

### Adding a trust relationship for Mint

To add a trust relationship for Mint, you can go through the following steps:

1. Open your Project Details page by clicking on a project from your projects list

2. Click the Settings button next to your project ID

3. Click the Add trust relationship button

4. Select Mint as the provider

5. Enter your Mint Vault subject you configured [here](https://www.rwx.com/docs/mint/oidc-depot#configure-depot-in-mint)

6. Click Add trust relationship

## Depot CLI Installation

---

title: Depot CLI Installation

ogTitle: Install the Depot CLI

description: Install our Depot CLI, a drop-in replacement for docker build.

---

How to install the `depot` CLI on all platforms, with links to CI configuration guides.

## Mac

For Mac, you can install the CLI with Homebrew:

```shell

brew install depot/tap/depot

```

Or download the latest version from [GitHub releases](https://github.com/depot/cli/releases).

## Linux

Either install with [our installation script](https://depot.dev/install-cli.sh):

```shell

# Install the latest version

curl -L https://depot.dev/install-cli.sh | sh

# Install a specific version

curl -L https://depot.dev/install-cli.sh | sh -s x.y.z

```

Or download the latest version from [GitHub releases](https://github.com/depot/cli/releases).

## CLI Reference

---

title: CLI Reference

ogTitle: Depot CLI Reference

description: A reference for the `depot` CLI, including all config, commands, flags, and options.

---

Below is a reference to the `depot` CLI, including all config, commands, flags, and options. To submit an issue or feature request, see our CLI repo on [GitHub](https://github.com/depot/cli).

## Specifying a Depot project

Some commands need to know which [project](/docs/core-concepts#projects) to route the build to.

For interactive terminals calling [`build`](#depot-build) or [`bake`](#depot-bake), if you don't specify a project, you will be prompted to choose one and given the option to save it for future use in a `depot.json` file.

Alternatively, you can specify the Depot project for any command using any of the following methods:

1. Use the `--project` flag with the ID of the project you want to use

2. Set the `DEPOT_PROJECT_ID` environment variable to the ID of the project you want to use

## Authentication

The Depot CLI supports different authentication mechanisms based on where you're running your build. You can read more about them in our [authentication docs](/docs/cli/authentication).

### Local builds with the CLI

For the CLI running locally, you can use the `depot login` command to authenticate with your Depot account, and the `depot logout` command to log out. Logging in generates a [user token](/docs/cli/authentication#user-access-tokens) and stores it on your local machine. We recommend using this option only when running builds locally.

### Build with the CLI in a CI environment

When using the CLI in a CI environment like GitHub Actions, we recommend configuring your workflows to leverage our [OIDC trust relationships](/docs/cli/authentication#oidc-trust-relationships). These prevent the need to store user tokens in your CI environment and allow you to authenticate with Depot using your CI provider's identity.

For CI providers that don't support OIDC, we recommend configuring your CI environment to use a [project token](/docs/cli/authentication#project-tokens).

### The `--token` flag

A variety of Depot CLI calls accept a `--token` flag, which allows you to specify a **user or project token** to use for the command. If no token is specified, the CLI will attempt to use the token stored on your local machine or look for an environment variable called `DEPOT_TOKEN`.
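A minimal shell sketch of the two ways to supply a token. The `depot build` command, the `--token` flag, and the `DEPOT_TOKEN` variable come from this reference; the wrapper function names and the `.` build context are hypothetical and only keep the two variants side by side.

```shell
# Variant 1: pass a user or project token explicitly with --token.
# $1 = token
build_with_token_flag() {
  depot build --token "$1" .
}

# Variant 2: supply the token through the DEPOT_TOKEN environment variable,
# which the CLI looks for when no --token flag is given.
# $1 = token
build_with_token_env() {
  DEPOT_TOKEN="$1" depot build .
}
```

In CI, the environment variable form is usually easier to wire up to a secret store, since the token never appears in the command line.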

## Commands

### `depot bake`

The `bake` command allows you to define all of your build targets in a central file, either HCL, JSON, or Compose. You can then pass that file to the `bake` command and Depot will build all of the target images with all of their options (i.e. platforms, tags, build arguments, etc.).

By default, `depot bake` will leave the built image in the remote builder cache. If you would like to download the image to your local Docker daemon (for instance, to `docker run` the result), you can use the `--load` flag. In some cases it is more efficient to load from the registry, so this may result in the build getting saved to the Depot Registry.

Alternatively, to push the image to a remote registry directly from the builder instance, you can use the `--push` flag.

**Example**

An example `docker-bake.hcl` file:

```hcl

group "default" {
  targets = ["original", "db"]
}

target "original" {
  dockerfile = "Dockerfile"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["example/app:test"]
}

target "db" {
  dockerfile = "Dockerfile.db"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["example/db:test"]
}

```

To build all of the images we just need to call `bake`:

```shell

depot bake -f docker-bake.hcl

```

If you want to build different targets in the bake file with different Depot projects, you can specify the `project_id` in the `target` block:

```hcl
group "default" {
  targets = ["original", "db"]
}

target "original" {
  dockerfile = "Dockerfile"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["example/app:test"]
  project_id = "project-id-1"
}

target "db" {
  dockerfile = "Dockerfile.db"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["example/db:test"]
  project_id = "project-id-2"
}
```

If you want to build a specific target in the bake file, you can specify it in the `bake` command:

```shell

depot bake -f docker-bake.hcl original

```

You can also save all of the targets built in a bake or compose file to the [Depot Registry](/docs/registry/overview) for later use with the `--save` flag:

```shell

depot bake -f docker-bake.hcl --save

```
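The `--load` and `--push` flags described at the start of this section are passed the same way as `--save`. A minimal sketch, using a hypothetical wrapper function `bake_to` that is not part of the Depot CLI:

```shell
# Hypothetical wrapper: run `depot bake` against a bake file and choose
# where the result goes. Mode is one of: load, push, save.
bake_to() {
  mode=$1
  file=${2:-docker-bake.hcl}
  case "$mode" in
    load|push|save) depot bake -f "$file" "--$mode" ;;
    *) echo "unknown mode: $mode" >&2; return 2 ;;
  esac
}

# bake_to load   # download the built images to the local Docker daemon
# bake_to push   # push the built images to a remote registry
```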

#### Docker Compose support

Depot supports using bake to build [Docker Compose](/blog/depot-with-docker-compose) files.

To use `depot bake` with a Docker Compose file, you can specify the file with the `-f` flag:

```shell

depot bake -f docker-compose.yml

```

Compose files have special extensions prefixed with `x-` to give additional information to the build process.

In this example, the `x-bake` extension is used to specify the tags for each service and the `x-depot` extension is used to specify different project IDs for each.

```yaml
services:
  mydb:
    build:
      dockerfile: ./Dockerfile.db
      x-bake:
        tags:
          - ghcr.io/myorg/mydb:latest
          - ghcr.io/myorg/mydb:v1.0.0
      x-depot:
        project-id: 1234567890
  myapp:
    build:
      dockerfile: ./Dockerfile.app
      x-bake:
        tags:
          - ghcr.io/myorg/myapp:latest
          - ghcr.io/myorg/myapp:v1.0.0
      x-depot:
        project-id: 9876543210
```

#### Flags for `bake`

This command accepts all the same command line flags as Docker's `docker buildx bake` command.

{/* */}

| Name | Description |
| ---- | ----------- |
| `build-platform` | Run builds on this platform ("dynamic", "linux/amd64", "linux/arm64") (default "dynamic") |
| `file` | Build definition file |
| `help` | Show the help doc for `bake` |
| `lint` | Lint Dockerfiles of targets before the build |
| `lint-fail-on` | Set the lint severity that fails the build ("info", "warn", "error", "none") (default "error") |
| `load` | Shorthand for "--set=\*.output=type=docker" |
| `metadata-file` | Write build result metadata to the file |
| `no-cache` | Do not use cache when building the image |
| `print` | Print the options without building |
| `progress` | Set type of progress output ("auto", "plain", "tty"). Use plain to show container output (default "auto") |
| `project` | Depot project ID |
| `provenance` | Shorthand for "--set=\*.attest=type=provenance" |
| `pull` | Always attempt to pull all referenced images |
| `push` | Shorthand for "--set=\*.output=type=registry" |
| `save` | Saves the build to the Depot Registry |
| `save-tag` | Saves the tag prepended to each target to the Depot Registry |
| `sbom` | Shorthand for "--set=\*.attest=type=sbom" |
| `sbom-dir` | Directory to store SBOM attestations |
| `set` | Override target value (e.g., "targetpattern.key=value") |
| `token` | Depot token ([authentication docs](/docs/cli/authentication)) |

{/* */}
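Because `--print` prints the resolved options without building, it can serve as a quick check of a bake file before a real build. A sketch with a hypothetical function name, `bake_checked`, that prints the options first and only then runs a linted build:

```shell
# Hypothetical helper: print what `depot bake` would build, then run the
# build with linting only if printing the options succeeded.
bake_checked() {
  file=${1:-docker-bake.hcl}
  depot bake -f "$file" --print || return 1
  depot bake -f "$file" --lint
}

# bake_checked docker-bake.hcl
```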

### `depot build`

Runs a Docker build using Depot's remote builder infrastructure.

By default, `depot build` will leave the built image in the remote builder cache. If you would like to download the image to your local Docker daemon (for instance, to `docker run` the result), you can use the `--load` flag. In some cases it is more efficient to load from the registry, so this may result in the build getting saved to the Depot Registry.

Alternatively, to push the image to a remote registry directly from the builder instance, you can use the `--push` flag.

**Example**

```shell

# Build remotely

depot build -t repo/image:tag .

```

```shell

# Build remotely, download the container locally

depot build -t repo/image:tag . --load

```

```shell

# Lint your dockerfile

depot build -t repo/image:tag . --lint

```

```shell

# Build remotely, push to a registry

depot build -t repo/image:tag . --push

```

#### Flags for `build`

This command accepts all the same command line flags as Docker's `docker buildx build` command.

{/* */}

| Name | Description |
| ---- | ----------- |
| `add-host` | Add a custom host-to-IP mapping (format: "host:ip") |
| `allow` | Allow extra privileged entitlement (e.g., "network.host", "security.insecure") |
| `attest` | Attestation parameters (format: "type=sbom,generator=image") |
| `build-arg` | Set build-time variables |
| `build-context` | Additional build contexts (e.g., name=path) |
| `build-platform` | Run builds on this platform ("dynamic", "linux/amd64", "linux/arm64") (default "dynamic") |
| `cache-from` | External cache sources (e.g., "user/app:cache", "type=local,src=path/to/dir") |
| `cache-to` | Cache export destinations (e.g., "user/app:cache", "type=local,dest=path/to/dir") |
| `cgroup-parent` | Optional parent cgroup for the container |
| `file` | Name of the Dockerfile (default: "PATH/Dockerfile") |
| `help` | Show help doc for `build` |
| `iidfile` | Write the image ID to the file |
| `label` | Set metadata for an image |
| `lint` | Lint Dockerfile before the build |
| `lint-fail-on` | Set the lint severity that fails the build ("info", "warn", "error", "none") (default "error") |
| `load` | Shorthand for "--output=type=docker" |
| `metadata-file` | Write build result metadata to the file |
| `network` | Set the networking mode for the "RUN" instructions during build (default "default") |
| `no-cache` | Do not use cache when building the image |
| `no-cache-filter` | Do not cache specified stages |
| `output` | Output destination (format: "type=local,dest=path") |
| `platform` | Set target platform for build |
| `progress` | Set type of progress output ("auto", "plain", "tty"). Use plain to show container output (default "auto") |
| `project` | Depot project ID |
| `provenance` | Shorthand for "--attest=type=provenance" |
| `pull` | Always attempt to pull all referenced images |
| `push` | Shorthand for "--output=type=registry" |
| `quiet` | Suppress the build output and print image ID on success |
| `save` | Saves the build to the Depot Registry |
| `save-tag` | Saves the tag provided to the Depot Registry |
| `sbom` | Shorthand for "--attest=type=sbom" |
| `sbom-dir` | Directory to store SBOM attestations |
| `secret` | Secret to expose to the build (format: "id=mysecret[,src=/local/secret]") |
| `shm-size` | Size of "/dev/shm" |
| `ssh` | SSH agent socket or keys to expose to the build |
| `tag` | Name and optionally a tag (format: "name:tag") |
| `target` | Set the target build stage to build |
| `token` | Depot token |
| `ulimit` | Ulimit options (default []) |

{/* */}
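Flags from the table can be combined in a single invocation. The sketch below wraps a multi-platform build that sets one build argument and pushes the result. The function name `build_and_push` and the `APP_VERSION` build argument are placeholders chosen for illustration, not Depot conventions.

```shell
# Hypothetical wrapper around `depot build` using flags from the table above.
build_and_push() {
  image=$1     # e.g. repo/image:tag
  version=$2   # value for the hypothetical APP_VERSION build argument
  depot build \
    --platform linux/amd64,linux/arm64 \
    --build-arg "APP_VERSION=$version" \
    --tag "$image" \
    --push \
    .
}

# build_and_push repo/image:tag 1.2.3
```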

### `depot cache`

Interact with the cache associated with a Depot project. The `cache` command consists of subcommands for each operation.

#### `depot cache reset`

Reset the cache of the Depot project to force a new empty cache volume to be created.

**Example**

Reset the cache of the current project ID in the root `depot.json`

```shell

depot cache reset .

```

Reset the cache of a specific project ID

```shell

depot cache reset --project 12345678910

```

### `depot gocache`

Configure Go tools to use Depot Cache. The Go tools will use the remote cache service to store and retrieve build artifacts.

_Note: This requires Go 1.24 or later._

Set the environment variable `GOCACHEPROG` to `depot gocache` to configure Go to use Depot Cache.

```shell

export GOCACHEPROG='depot gocache'

```

Next, run your Go build commands as usual.

```shell

go build ./...

```

To enable verbose output, add the `--verbose` option:

```shell

export GOCACHEPROG='depot gocache --verbose'

```

To clean the cache, you can use the typical `go clean` workflow:

```shell

go clean -cache

```

If you are in multiple Depot organizations and want to specify the organization, you can use the `--organization` flag.

```shell

export GOCACHEPROG='depot gocache --organization ORG_ID'

```
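The `--verbose` and `--organization` options can also be set in one `GOCACHEPROG` value, assuming the two options can be combined. A small sketch that assembles the value; `gocache_prog` is a hypothetical helper, and `ORG_ID` remains a placeholder for your organization ID.

```shell
# Hypothetical helper: build a GOCACHEPROG value from optional settings.
#   $1  organization ID (may be empty)
#   $2  "verbose" to enable verbose output
gocache_prog() {
  prog='depot gocache'
  if [ -n "${1:-}" ]; then
    prog="$prog --organization $1"
  fi
  if [ "${2:-}" = verbose ]; then
    prog="$prog --verbose"
  fi
  echo "$prog"
}

# Example: export GOCACHEPROG="$(gocache_prog ORG_ID verbose)"
```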

### `depot configure-docker`

Configure Docker to use Depot's remote builder infrastructure. This command installs Depot as a Docker CLI plugin (i.e., `docker depot ...`), sets the Depot plugin as the default Docker builder (i.e., `docker build`), and activates a buildx driver (i.e., `docker buildx build ...`).

```shell

depot configure-docker

```

If you want to uninstall the plugin, you can specify the `--uninstall` flag.

```shell

depot configure-docker --uninstall

```

### `depot list`

Interact with Depot builds.

#### `depot list builds`

Display the latest Depot builds for a project. By default, the command runs an interactive listing of Depot builds showing status and build duration.

To exit, type `q` or press `ctrl+c`.

**Example**

List builds for the project in the current directory.

```shell

depot list builds

```

**Example**

List builds for a specific project ID

```shell

depot list builds --project 12345678910

```

**Example**

The list command can output build information to stdout with the `--output` option. It supports `json` and `csv`.

Output builds in JSON for the project in the current directory.

```shell

depot list builds --output json

```
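The same option works for CSV, and the output can be redirected to a file for later processing. A sketch with a hypothetical helper name, `export_builds_csv`; combining `--project` with `--output` is an assumption based on the two examples above.

```shell
# Hypothetical helper: save the build list for a project as CSV.
export_builds_csv() {
  project_id=$1
  out_file=${2:-builds.csv}
  depot list builds --project "$project_id" --output csv > "$out_file"
}

# export_builds_csv 12345678910 builds.csv
```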

### `depot init`

Initialize an existing Depot project in the current directory. The CLI will display an interactive list of your Depot projects for you to choose from, then write a `depot.json` file in the current directory with the contents `{"id": "PROJECT_ID"}`.

**Example**

```shell

depot init

```
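Scripts sometimes need the project ID that `depot init` wrote. A minimal sketch that extracts it with `sed`, assuming the file has the single-key shape shown above. `depot_project_id` is a hypothetical helper, and a JSON-aware tool would be more robust for other layouts.

```shell
# Hypothetical helper: print the "id" value from a depot.json file.
depot_project_id() {
  file=${1:-depot.json}
  sed -n 's/.*"id"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$file"
}

# Example: reset the cache of the project linked to the current directory
#   depot cache reset --project "$(depot_project_id)"
```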

### `depot login`

Authenticates with your Depot account, automatically creating and storing a user token on your local machine.

**Examples**

```shell

# Login and select organization interactively

$ depot login

# Login and specify organization ID

$ depot login --org-id 1234567890

# Clear existing token before logging in

$ depot login --clear

```

### `depot logout`

Log out of your Depot account, removing your user token from your local machine.

**Example**

```shell

depot logout

```

### `depot projects create`

Create a new project in your Depot organization.

```shell

depot projects create "your-project-name"

```

Projects will be created with the default region `us-east-1` and cache storage policy of 50 GB per architecture. You can specify a different region and cache storage policy using the `--region` and `--cache-storage-policy` flags.

```shell

depot projects create --region eu-central-1 --cache-storage-policy 100 "your-project-name"

```

If you are in more than one organization, you can specify the ID of the organization you want the project to be created in using the `--organization` flag.

```shell

depot projects create --organization 12345678910 "your-project-name"

```

#### Flags for `create`

Additional flags that can be used with this command.

{/* */}

| Name | Description |
| ---- | ----------- |
| `platform` | Pulls image for specific platform ("linux/amd64", "linux/arm64") |
| `organization` | Depot organization ID |
| `region` | Build data will be stored in the chosen region (default "us-east-1") |
| `cache-storage-policy` | Build cache to keep per architecture in GB (default 50) |
| `token` | Depot token |

{/* */}

### `depot projects list`

Display an interactive listing of current Depot projects. Selecting a specific project will display the latest builds.

To return from the latest builds to projects, press `ESC`.

To exit, type `q` or press `ctrl+c`.

**Example**

```shell

depot list projects

```

### `depot pull`

Pull an image from the Depot Registry by build ID in a project.

**Example**

```shell

depot pull --project

```

You can also specify the tag to assign to the image using the `-t` flag.

**Example**

```shell

depot pull --project -t :

```

There is also the option to pull an image for a specific platform.

```shell

depot pull --project --platform linux/arm64

```

#### Flags for `pull`

Additional flags that can be used with this command.

{/* */}

| Name | Description |
| ---- | ----------- |
| `platform` | Pulls image for specific platform ("linux/amd64", "linux/arm64") |
| `progress` | Set type of progress output ("auto", "plain", "tty", "quiet") (default "auto") |
| `project` | Depot project ID |
| `tag` | Optional tags to apply to the image |
| `token` | Depot token |

{/* */}

### `depot pull-token`

Generate a short-lived token to pull an image from the Depot Registry.

**Example**

```shell

depot pull-token --project

```

You can also specify a build ID to generate a token for a specific build.

**Example**

```shell

depot pull-token --project

```

#### Flags for `pull-token`

Additional flags that can be used with this command.

{/* */}

| Name | Description |
| ---- | ----------- |
| `project` | Depot project ID |
| `token` | Depot token |

{/* */}

### `depot push`

Push an image from the Depot Registry to another registry. It uses registry credentials stored in Docker when pushing to registries. If you have not already authenticated with your registry, you should do so with `docker login` before running `depot push`.

Alternatively, you can specify the environment variables `DEPOT_PUSH_REGISTRY_USERNAME` and `DEPOT_PUSH_REGISTRY_PASSWORD` for the registry credentials. This allows you to skip the `docker login` step.
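As a sketch of the environment-variable route, the hypothetical wrapper below sets both variables for a single `depot push` call and forwards every remaining argument unchanged. `REGISTRY_USER` and `REGISTRY_PASSWORD` in the usage line are placeholders for whatever your CI secret store provides.

```shell
# Hypothetical wrapper: supply registry credentials through the environment
# for one `depot push` invocation only.
push_with_env_credentials() {
  user=$1
  password=$2
  shift 2
  DEPOT_PUSH_REGISTRY_USERNAME="$user" \
  DEPOT_PUSH_REGISTRY_PASSWORD="$password" \
    depot push "$@"
}

# push_with_env_credentials "$REGISTRY_USER" "$REGISTRY_PASSWORD" [depot push arguments]
```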

**Example**

```shell

depot push --project

```

You can also specify the tag to assign to the image that is being pushed by using the `-t` flag.

**Example**

```shell

depot push --project -t :

```

#### Flags for `push`

Additional flags that can be used with this command.

{/* */}

| Name | Description |
| ---- | ----------- |
| `progress` | Set type of progress output ("auto", "plain", "tty", "quiet") (default "auto") |
| `project` | Depot project ID |
| `tag` | Optional tags to apply to the image |
| `token` | Depot token |

{/* */}

### `depot org`

Manage organizations you have access to in Depot. The `org` command group provides tools to list, switch, and show your current organization context.

#### `depot org list`

List organizations that you can access. By default, this command opens an interactive table. You can also output the list in `json` or `csv` format for scripting.

**Usage**

```shell

depot org list

```

#### `depot org switch`

Set the current organization in your global Depot settings. This affects which organization is used by default for commands that support organization context.

**Usage**

```shell

depot org switch [org-id]

```

If you do not provide an `org-id`, you will be prompted to select one interactively.

**Examples**

```shell

# Switch to a specific organization by ID

$ depot org switch 1234567890

# Select organization interactively

$ depot org switch

```

#### `depot org show`

Show the current organization set in your global Depot settings.

**Usage**

```shell

depot org show

```

**Example**

```shell

$ depot org show

1234567890

```
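Because `depot org show` prints the current organization ID, a script can switch organizations temporarily and then restore the previous one. A sketch, assuming the output is the bare ID as in the example above; `with_org` is a hypothetical helper, not a Depot command.

```shell
# Hypothetical helper: run a command with another organization selected,
# then switch back to the organization that was active before.
with_org() {
  target=$1
  shift
  previous=$(depot org show) || return 1
  depot org switch "$target" || return 1
  "$@"
  status=$?
  depot org switch "$previous"   # restore the original organization
  return "$status"
}

# Example: with_org 1234567890 depot list projects
```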

## Docker Arm images

---

title: Docker Arm images

ogTitle: Building native Docker Arm images with Depot

description: Build native Docker Arm images or multi-platform Docker images without emulation.

---

## Docker Arm images with Depot

Building Docker images for Arm with `docker build` on a host running an Intel chip forces you to use QEMU emulation. Likewise, building multi-platform Docker images is only possible with emulation or by running your own BuildKit builders.

Depot removes emulation altogether.

Depot is a remote Docker container build service that orchestrates optimized BuildKit builders on native CPUs for Intel (x86) and Arm (arm64).

When a Docker image build is routed to Depot either via [`depot build`](/docs/cli/reference#depot-build) or [`docker build`](/docs/container-builds/how-to-guides/docker-build#how-to-use-depot-with-docker), we launch optimized builders for each architecture requested with a persistent layer cache attached to them. Each image builder, by default, has 16 CPUs and 32 GB of memory. If you're on a startup or business plan, you can configure your builders to be larger, with up to 64 CPUs and 128 GB of memory. Each builder also has a fast NVMe SSD with at least 50 GB for layer caching.

## How to build Docker images for Arm CPUs like Apple Silicon or AWS Graviton

When you run `depot build`, or `docker build` configured to use Depot, Depot automatically detects the architecture you're building for and routes the build to the appropriate builder.

So, if you're building a Docker image from a macOS device running Apple Silicon (M1, M2, M3, M4), there is nothing extra you need to do. We will detect the architecture and route the build to an Arm builder.

```shell

depot build .

```

If you're building a Docker image from an Intel machine, like a CI provider, you can specify `--platform linux/arm64` to build a Docker Arm image.

```shell

docker build --platform linux/arm64 .

```

We have integration guides for most of the CI providers:

- [Bitbucket Pipelines](/docs/container-builds/reference/bitbucket-pipelines)

- [Buildkite](/docs/container-builds/reference/buildkite)

- [CircleCI](/docs/container-builds/reference/circleci)

- [GitHub Actions](/docs/container-builds/reference/github-actions)

- [GitLab CI](/docs/container-builds/reference/gitlab-ci)

- [Google Cloud Build](/docs/container-builds/reference/google-cloud-build)

- [Jenkins](/docs/container-builds/reference/jenkins)

- [Travis CI](/docs/container-builds/reference/travis-ci)

## How to build multi-platform Docker images

With Depot, we can launch multiple builders in parallel to build multi-platform Docker images concurrently. To build a multi-platform Docker image for both Intel & Arm, we can specify `--platform linux/amd64,linux/arm64` to `depot build` or `docker build`.

```shell

depot build --platform linux/amd64,linux/arm64 .

```

### Loading a multi-platform Docker image via `--load`

If you want to load a multi-platform Docker image into your local Docker daemon, you will hit an error when using `docker buildx build --load`:

```shell

docker exporter does not currently support exporting manifest lists

```

This is because the default behavior of load does not support loading multi-platform Docker images. To get around this, you can use [`depot build --load`](/docs/cli/reference#depot-build) instead where we have made load faster & more intelligent.

```shell

depot build --platform linux/amd64,linux/arm64 --load .

```

## Build autoscaling

---

title: Build autoscaling

description: How to enable and configure container build autoscaling to parallelize builds across multiple builders

---

import {ImageWithCaption} from '~/components/Image'

Container build autoscaling allows you to automatically scale out your builds to multiple BuildKit builders based on the number of concurrent builds you want to process on a single builder. This feature is available on all Depot plans and can significantly speed up your container builds when you have multiple concurrent builds or resource-intensive builds.

## How build autoscaling works

By default, all builds for a project are routed to a single BuildKit host per architecture you're building. Each BuildKit builder can process multiple jobs concurrently on the same host, which enables deduplication of work across builds that share similar steps and layers.

With build autoscaling enabled, Depot will automatically spin up additional BuildKit builders when the concurrent build limit is reached. Here's how the process works:

1. You run `depot build`, which informs our control plane that you'd like to run a container build

2. The control plane checks your autoscaling configuration to determine the maximum concurrent builds per builder

3. If the current builder is at capacity, the provisioning system spins up additional BuildKit builders

4. Each additional builder operates on a clone of the main builder's layer cache

5. The `depot build` command connects directly to an available builder to run the build

## When to use build autoscaling

Build autoscaling is particularly useful in these scenarios:

- **High concurrent build volume**: When you have many builds running simultaneously that consume all resources of a single builder

- **Resource-intensive builds**: When individual builds require significant CPU, memory, or I/O resources

- **Time-sensitive builds**: When you need to reduce build queue times during peak periods

- **CI/CD pipelines with parallel jobs**: When your pipeline triggers multiple builds at once

### When NOT to use build autoscaling

Consider these tradeoffs before enabling autoscaling:

- **Cache efficiency**: Additional builders operate on cache clones that are not written back to the main cache, reducing cache hit rates

- **Deduplication loss**: Builds on different builders cannot share work, even if they have similar layers

- **Small, infrequent builds**: If your builds are small and run infrequently, the overhead may not be worth it

**Recommendation**: Before enabling autoscaling, first try sizing up your container builder. You can select larger builder sizes on our [pricing page](/pricing), which allows you to run larger builds on a single builder without needing to scale out.

## How to enable build autoscaling

To enable container build autoscaling:

1. Navigate to your Depot project settings

2. Go to the **Settings** tab

3. Find the **Build autoscaling** section

4. Toggle **Enable horizontal autoscaling**

5. Set the **Maximum concurrent builds per builder** (default is 1)

6. Click **Save changes**

The concurrent builds setting determines how many builds can run on a single builder before triggering a scale-out event. For example:

- Setting it to `1` means each build gets its own dedicated builder

- Setting it to `3` means up to 3 builds can share a builder before a new one is launched
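To make the setting concrete, the number of builders needed for a burst of builds can be estimated with a ceiling division. This is an illustrative calculation only, assuming builds are packed onto builders up to the limit. It is not a description of Depot's actual scheduler, and `builders_needed` is a hypothetical name.

```shell
# builders_needed CONCURRENT_BUILDS MAX_PER_BUILDER
# Ceiling division: how many builders a burst of builds would occupy.
builders_needed() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

builders_needed 6 1   # prints 6: each build gets its own builder
builders_needed 6 3   # prints 2: builds share builders three at a time
builders_needed 7 3   # prints 3: the seventh build needs one more builder
```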

## Cache behavior with autoscaling

Understanding cache behavior is crucial when using autoscaling:

### Cache cloning

When additional builders are launched due to autoscaling:

1. They receive a **read-only clone** of the main builder's layer cache

2. New layers built on scaled builders are stored locally but **not persisted** back to the main cache

3. When the scaled builder terminates, its local cache changes are lost

### Cache implications

This means:

- Builds on scaled builders can read from the main cache

- They cannot contribute new layers back to the main cache

- Subsequent builds may need to rebuild layers that were already built on scaled builders

- Cache efficiency may decrease with heavy autoscaling usage

## Billing and costs

Build autoscaling is available on **all Depot plans** at no additional cost:

- **No extra charges**: Autoscaling itself doesn't incur additional fees

- **Standard compute rates**: You pay the same per-minute rate for scaled builders as regular builders

- **No cache storage charges**: Cache clones are temporary and don't count toward your storage quota

- **Pay for what you use**: Scaled builders are terminated when not in use

## Best practices

1. **Monitor your builds**: Use Depot's build insights to understand your build patterns before enabling autoscaling

2. **Start conservative**: Begin with a higher concurrent build limit and decrease if needed

3. **Size up first**: Consider using larger builder sizes before enabling autoscaling

4. **Review cache hit rates**: Monitor if autoscaling significantly impacts your cache efficiency

5. **Adjust during peak times**: You can dynamically adjust settings based on your build patterns

## Example configuration

Here's an example of when autoscaling might be beneficial:

**Scenario**: Your team has resource-intensive builds that compile large applications with heavy dependencies. Each build requires significant CPU and memory resources, and you frequently have multiple builds running concurrently due to:

- Multiple developers pushing code simultaneously

- CI pipelines that build multiple variants of your application (different environments, architectures, or configurations)

- Monorepo setups where changes trigger builds for multiple services

**Without autoscaling**:

- Multiple resource-intensive builds compete for CPU and memory on a single builder

- Builds experience CPU throttling and memory pressure

- Build times increase dramatically when multiple builds run concurrently

- Builds may fail due to out-of-memory errors when too many run simultaneously

**With autoscaling** (max 1 concurrent build per builder):

- Each resource-intensive build gets its own dedicated builder with full access to 16 CPUs and 32 GB of memory

- No resource contention between builds

- Consistent, predictable build times regardless of concurrent load

- Builds can fully utilize available compute resources without interference

**Example build characteristics that benefit from this configuration**:

- Large Docker images with many layers (>50 layers)

- Compilation of languages like Rust, C++, or Go with extensive dependencies

- Machine learning model training or data processing during build

- Multi-stage builds with resource-intensive compilation steps

- Builds that require significant disk I/O for dependency installation

Result: Each build runs with dedicated resources, preventing resource contention and ensuring optimal performance even during peak usage.

## Troubleshooting

If you're experiencing issues with autoscaling:

1. **Builds still queueing**: Verify autoscaling is enabled and check your concurrent build limit

2. **Increased cache misses**: This is expected behavior with cache clones; consider whether the speed benefit outweighs the loss in cache efficiency

3. **Costs increasing**: Monitor your usage in the Depot dashboard and adjust concurrent limits if needed

For additional help, reach out on [Discord](https://depot.dev/discord) or contact support.

## Continuous Integration

---

title: Continuous Integration

ogTitle: How to use Depot in your existing CI provider

description: Make your container image builds faster in your existing CI by replacing docker build with depot build.

---

## Why use Depot with your CI provider?

Depot provides a remote Docker build service that makes the image build process faster and more intelligent.

By routing the image build step of your CI to Depot, you can complete the image build up to 40x faster than you could in your generic CI provider. This saves you build minutes in your existing CI provider and, more importantly, saves developer time spent waiting for the build to finish.

The `depot build` command is a drop-in replacement for `docker build` and `docker buildx build`. Alternatively, you can [configure your local Docker CLI to use Depot as the default builder](/docs/container-builds/how-to-guides/docker-build). Depot launches remote builders for both native Intel & Arm CPUs with, by default, 16 CPUs, 32 GB of memory, and a 50 GB persistent NVMe cache SSD. On a startup or business plan, in your project settings, you can configure your builders to be larger, with up to 64 CPUs and 128 GB of memory.

Running `depot` in a continuous integration environment is a great way to get fast and consistent builds with any CI provider. See below for documentation on integrating Depot with your CI provider.

## Providers

- [AWS CodeBuild](/docs/container-builds/reference/aws-codebuild)

- [Bitbucket Pipelines](/docs/container-builds/reference/bitbucket-pipelines)

- [Buildkite](/docs/container-builds/reference/buildkite)

- [CircleCI](/docs/container-builds/reference/circleci)

- [GitHub Actions](/docs/container-builds/reference/github-actions)

- [GitLab CI](/docs/container-builds/reference/gitlab-ci)

- [Google Cloud Build](/docs/container-builds/reference/google-cloud-build)

- [Jenkins](/docs/container-builds/reference/jenkins)

- [Travis CI](/docs/container-builds/reference/travis-ci)

## Dev Containers

---

title: Dev Containers

ogTitle: How to use Depot with Dev Containers

description: Leverage Depot to build your Dev Containers on demand with our configure-docker command.

---

## Why use Depot with Dev Containers?

[Dev Containers](https://code.visualstudio.com/docs/devcontainers/containers) are becoming a popular way to leverage a container as a fully featured development environment directly integrated with Visual Studio Code. You can open any folder inside a container and use the full power of VS Code inside.

With Depot, you can build your Dev Containers on demand with instant shared caching across your entire team.

## How to use Depot with Dev Containers

First, you will need to make sure you have [installed the `depot` CLI](/docs/container-builds/quickstart#installing-the-cli) and [configured a project](/docs/container-builds/quickstart#creating-a-project).

### Connect to your Depot project from the `depot` CLI

Once the CLI is installed, you can configure your environment:

1. Run `depot login` to log in to your Depot account

2. Change into the root of your project directory

3. Run `depot init` to link your project to your repository; this will create a `depot.json` file in the current directory

**Note: You can also connect `depot` to your project by passing the `DEPOT_PROJECT_ID` environment variable**
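As a sketch of the environment-variable alternative, the hypothetical helper below sets `DEPOT_PROJECT_ID` for a single `devcontainer build` call instead of relying on `depot.json`. It assumes `depot configure-docker` has already been run, as described in the next step, and that the variable is inherited by the underlying `docker buildx build` process.

```shell
# Hypothetical helper: build a Dev Container against a specific Depot project.
devcontainer_build_for_project() {
  project_id=$1
  workspace=${2:-.}
  DEPOT_PROJECT_ID="$project_id" devcontainer build --workspace-folder "$workspace"
}

# devcontainer_build_for_project PROJECT_ID .
```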

### Configure Docker to use Depot

Dev Containers uses the `docker buildx build` command internally to build the container image. You can configure Depot as a plugin for the Docker CLI and Buildx with the following command:

```bash

depot configure-docker

```

The `configure-docker` command is a one-time operation that routes any `docker build` or `docker buildx build` commands to Depot builders.

### Build your Dev Container

There are multiple options for building your Dev Container:

1. You can open an existing folder in VS Code in a container, [see these docs](https://code.visualstudio.com/docs/devcontainers/containers#_quick-start-open-an-existing-folder-in-a-container)

2. You can open a Git repo or Pull Request in an isolated container, [see these docs](https://code.visualstudio.com/docs/devcontainers/containers#_quick-start-open-a-git-repository-or-github-pr-in-an-isolated-container-volume)

3. You can also build your Dev container directly using the [`devcontainer` CLI](https://code.visualstudio.com/docs/devcontainers/devcontainer-cli#_prebuilding):

```bash

devcontainer build --workspace-folder .

[4 ms] @devcontainers/cli 0.50.0. Node.js v20.3.1. darwin 22.5.0 arm64.

[1878 ms] Start: Run: docker buildx build --load --build-arg BUILDKIT_INLINE_CACHE=1 -f /var/folders/w9/8yw9qm955bqcdwphh62w6fvr0000gn/T/devcontainercli/container-features/0.50.0-1690365763237/Dockerfile-with-features -t vsc-example-241be831c2682292f834c48f737ab308a1e901188127c5444a37dd0c0a339c90 --target dev_containers_target_stage --build-arg _DEV_CONTAINERS_BASE_IMAGE=dev_container_auto_added_stage_label /Users/user1/projects/proj/example

[+] Building 3.5s (19/19) FINISHED

=> [depot] build: https://depot.dev/orgs/orgid/projects/projectid/builds/9hh2rh7zkq 0.0s

=> [depot] launching arm64 builder 0.5s

=> [depot] connecting to arm64 builder 0.4s

=> [internal] load .dockerignore 0.4s

=> => transferring context: 116B 0.3s

=> [internal] load build definition from Dockerfile-with-features 0.3s

=> => transferring dockerfile: 601B 0.3s

=> [internal] load metadata for docker.io/library/node:16-alpine 0.4s

=> [build 1/5] FROM docker.io/library/node:16-alpine@sha256:6c381d5dc2a11dcdb693f0301e8587e43f440c90cdb8933eaaaabb905d44cdb9 0.0s

....

```

You should see something similar to the above in your VS Code or `devcontainer` build logs. You can see that the `docker buildx build` command is called, and then you see log lines for `[depot] ...` that confirm your Docker image build is routed to Depot builders.

## Docker Bake

---

title: Docker Bake

ogTitle: How to build multiple Docker images in parallel with Depot bake

description: Learn how to use depot bake to build multiple container images concurrently from HCL, JSON, or Docker Compose files

---

Building multiple Docker images that share common dependencies? Need to build all your services at once? `depot bake` lets you build multiple images in parallel from a single file, dramatically speeding up your builds while taking advantage of shared work between images.

## Why use bake?

Traditional approaches to building multiple images often involve sequential builds using tools like `make` or shell scripts. This means waiting for each image to complete before starting the next one, and rebuilding shared dependencies multiple times.

With `depot bake`, you can:

- Build all images in parallel on dedicated BuildKit builders

- Automatically deduplicate shared work across images

- Define all your builds in a single HCL, JSON, or Docker Compose file

- Get native Intel and Arm builds without emulation

- Leverage persistent caching across all your builds

## How to use depot bake

### Basic usage

By default, `depot bake` looks for these files in your project root:

- `compose.yaml`, `compose.yml`, `docker-compose.yml`, `docker-compose.yaml`

- `docker-bake.json`, `docker-bake.override.json`

- `docker-bake.hcl`, `docker-bake.override.hcl`

Run bake with no arguments to build the `default` group (HCL or JSON bake files) or all services (Compose files):

```shell

depot bake

```

### Specifying a bake file

Use the `-f` flag to specify a custom bake file:

```shell

depot bake -f my-bake-file.hcl

```

### Building specific targets

Pass one or more target names to build only those targets instead of everything in the file:

```shell

depot bake app db

```

## HCL bake file format

HCL is the recommended format for bake files as it provides the most features and flexibility.

### Basic example

```hcl
group "default" {
  targets = ["app", "db", "cron"]
}

target "app" {
  dockerfile = "Dockerfile.app"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["myrepo/app:latest"]
}

target "db" {
  dockerfile = "Dockerfile.db"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["myrepo/db:latest"]
}

target "cron" {
  dockerfile = "Dockerfile.cron"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["myrepo/cron:latest"]
}
```

You can think of each `target` as a Docker build command, where you specify the Dockerfile, platforms, and tags for the image. These targets can be grouped together in a `group` to build them all at once.

Our optimized instances of BuildKit will build these images in parallel, automatically deduplicating work across targets.
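
A bake file can hold more than one group. The sketch below assumes that `depot bake` accepts a group name wherever a target name is accepted, as upstream Docker Bake does. The `backend` group is a hypothetical name added for illustration and is not part of the example above.

```hcl
# Built when no target is given on the command line.
group "default" {
  targets = ["app", "db", "cron"]
}

# Hypothetical extra group covering only the non-app targets.
group "backend" {
  targets = ["db", "cron"]
}

target "app" {
  dockerfile = "Dockerfile.app"
  tags = ["myrepo/app:latest"]
}

target "db" {
  dockerfile = "Dockerfile.db"
  tags = ["myrepo/db:latest"]
}

target "cron" {
  dockerfile = "Dockerfile.cron"
  tags = ["myrepo/cron:latest"]
}
```

Under that assumption, `depot bake backend` would build only `db` and `cron`, while `depot bake` with no arguments would still build all three targets.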

### Using variables

Make your bake files more flexible with variables:

```hcl
variable "TAG" {
  default = "latest"
}

variable "REGISTRY" {
  default = "myrepo"
}

target "app" {
  dockerfile = "Dockerfile.app"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["${REGISTRY}/app:${TAG}"]
}
```

Override variables from the command line by setting environment variables with the same names:

```shell

TAG=v1.0.0 REGISTRY=mycompany depot bake

```

### Sharing base images

Use `contexts` to specify dependencies between targets in a bake file. A common use is to declare that several targets share a base image, so the base image is built only once and the work is deduplicated:

```hcl
target "base" {
  dockerfile = "Dockerfile.base"
  platforms = ["linux/amd64", "linux/arm64"]
}

target "app" {
  contexts = {
    base = "target:base"
  }
  dockerfile = "Dockerfile.app"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["myrepo/app:latest"]
}

target "worker" {
  contexts = {
    base = "target:base"
  }
  dockerfile = "Dockerfile.worker"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["myrepo/worker:latest"]
}
```

In your Dockerfiles, reference the base context:

```dockerfile

# Dockerfile.app

FROM base

# ... rest of your app Dockerfile

```

### Matrix builds

You can use the `matrix` key to parameterize a single target so that it builds one image per input value. This is helpful when several targets in your bake file are nearly identical.

```hcl
target "service" {
  name = "service-${item}"
  matrix = {
    item = ["frontend", "backend", "api"]
  }
  dockerfile = "Dockerfile.${item}"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["myrepo/${item}:latest"]
}
```

**Note: The `name` property is required when using the `matrix` property, so that each value in the matrix produces a uniquely named image build.**
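
A matrix can also carry more than one axis, in which case one build is generated per combination of values. The following is a minimal sketch that assumes upstream Docker Bake matrix behavior carries over to `depot bake`. The `node` axis, the `NODE_VERSION` build argument, and the tag scheme are hypothetical names chosen for illustration.

```hcl
target "service" {
  # The name includes both axis values so every combination is unique.
  name = "service-${item}-node${node}"
  matrix = {
    item = ["frontend", "backend"]
    node = ["18", "20"]
  }
  dockerfile = "Dockerfile.${item}"
  args = {
    # Hypothetical build argument; the Dockerfile would need a matching ARG.
    NODE_VERSION = node
  }
  tags = ["myrepo/${item}:node${node}"]
}
```

With two values on each axis, this would expand to four targets, such as `service-frontend-node18` and `service-backend-node20`.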

## Docker Compose bake format

You can use your existing Docker Compose files as bake files. Compose files have limitations compared to HCL, such as no support for `inherits` or `variable` blocks, but they are a convenient way to build all of your services in parallel without rewriting your existing configuration.

```yaml
services:
  app:
    build:
      dockerfile: Dockerfile.app
      platforms:
        - linux/amd64
        - linux/arm64
    image: myrepo/app:latest
  db:
    build:
      dockerfile: Dockerfile.db
      platforms:
        - linux/amd64
        - linux/arm64
    image: myrepo/db:latest
  worker:
    build:
      dockerfile: Dockerfile.worker
      platforms:
        - linux/amd64
        - linux/arm64
    image: myrepo/worker:latest
```

Build all services defined in the Docker Compose file with:

```shell

depot bake -f docker-compose.yml

```

## Advanced features

### Using multiple Depot projects in a bake file

In some cases you may want to shard your container builds across different Depot projects so that each build gets a full BuildKit host to itself. In a Compose file, you can specify a different Depot project per service:

```yaml
services:
  frontend:
    build:
      dockerfile: ./Dockerfile.frontend
      x-depot:
        project-id: project-id-1
  backend:
    build:
      dockerfile: ./Dockerfile.backend
      x-depot:
        project-id: project-id-2
```

You can also specify the project ID in HCL for each `target`:

```hcl
target "app" {
  dockerfile = "Dockerfile.app"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["myrepo/app:latest"]
  project_id = "project-id-1"
}

target "db" {
  dockerfile = "Dockerfile.db"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["myrepo/db:latest"]
  project_id = "project-id-2"
}

target "worker" {
  dockerfile = "Dockerfile.worker"
  platforms = ["linux/amd64", "linux/arm64"]
  tags = ["myrepo/worker:latest"]
  project_id = "project-id-3"
}
```

### Loading images locally

Load specific targets to your local Docker daemon by including the target name after the load flag:

```shell

depot bake --load app

```

This only loads the specified target, not all targets in the bake file.

### Using the Depot Registry with bake

You can save built images to the [Depot Registry](/docs/registry/overview) for later use:

```shell

depot bake --save --metadata-file=build.json

```

To set a custom tag for the images stored in the registry, use the `--save-tag` flag:

```shell

depot bake --save --save-tag myrepo/app:v1.0.0

```

You can pull specific targets out of the Depot Registry later using the [`depot pull`](/docs/cli/reference#depot-pull) command:

```shell

depot pull --project <project-id> <build-id> --target app,db

```

Or push to your registry after tests pass:

```shell

depot push --project <project-id> <build-id> --target app \
  --tag myregistry/app:v1.0.0

```

### Passing build arguments (i.e. `--build-arg`) to a target

You can pass build arguments to your targets in the bake file using the `args` attribute. This is useful for passing build-time values, such as tool versions or an environment name, to your Docker builds.

```hcl
target "app" {
  args = {
    NODE_VERSION = "18"
    ENV = "production"
  }
}
```
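
Build arguments can also be driven by a bake variable, so the value can change per invocation without editing the file. This is a sketch under the assumption that variables and `args` combine in `depot bake` as they do in upstream Docker Bake. The `NODE_VERSION` variable and its default are illustrative, and the Dockerfile is assumed to declare a matching `ARG NODE_VERSION`.

```hcl
# Illustrative variable; an environment variable of the same name overrides the default.
variable "NODE_VERSION" {
  default = "18"
}

target "app" {
  dockerfile = "Dockerfile.app"
  args = {
    # Takes its value from the variable declared above.
    NODE_VERSION = NODE_VERSION
  }
}
```

Under those assumptions, running `NODE_VERSION=20 depot bake app` would build `app` with the overridden value, using the same override mechanism described under "Using variables".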

## GitHub Actions integration

You can use the [`depot/bake-action`](https://github.com/depot/bake-action) in your GitHub Actions workflows to leverage `depot bake` for building your bake files with our [Docker build service](/products/container-builds):

```yaml
name: Build images

on: push

jobs:
  bake:
    runs-on: ubuntu-latest
    permissions:
      id-token: write
      contents: read
    steps:
      - uses: actions/checkout@v4
      - uses: depot/setup-action@v1
      - uses: depot/bake-action@v1
        with:
          file: docker-bake.hcl
          push: true
```

## Tips and best practices

1. **Use groups** to organize related targets and build them together

2. **Leverage inheritance** with `inherits` to reduce duplication

3. **Use contexts** for shared base images to maximize deduplication

4. **Set platforms explicitly** to ensure consistent multi-platform builds

5. **Use variables** for configuration that changes between environments

6. **Use multiple Depot projects** to shard builds across different BuildKit hosts for resource intensive builds

7. **Save to the Depot Registry** in CI to build once and push after tests
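
Tip 2 mentions `inherits`, which none of the earlier examples show. As a minimal sketch, assuming standard Bake inheritance semantics apply to `depot bake`, a shared target can hold common settings that other targets inherit. The `_common` target name is hypothetical.

```hcl
# Hypothetical shared target holding settings common to every image.
target "_common" {
  platforms = ["linux/amd64", "linux/arm64"]
  args = {
    ENV = "production"
  }
}

target "app" {
  # Pulls in platforms and args from _common.
  inherits = ["_common"]
  dockerfile = "Dockerfile.app"
  tags = ["myrepo/app:latest"]
}

target "db" {
  inherits = ["_common"]
  dockerfile = "Dockerfile.db"
  tags = ["myrepo/db:latest"]
}
```

Attributes set directly on `app` or `db` would take precedence over the inherited ones, so a single target can still override `platforms` or `args` where needed.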

## Next steps

- Learn more about [BuildKit parallelization](/blog/buildkit-in-depth)

- Explore the [full bake syntax reference](/blog/buildx-bake-deep-dive)

- Check out how to get faster container builds with [`depot/bake-action`](/docs/container-builds/reference/github-actions)

## Docker

---

title: Docker

ogTitle: How to use Depot with your existing Docker commands

description: Use Depot with your existing Docker commands like docker build, docker buildx build, and docker compose build, with our depot configure-docker command.

---

## Running builds with Depot